This morning I ran what I think will be my final set of tests on this. The results settle the question to my own satisfaction. As far as it makes any difference in the real world, there's no advantage to the CAI screen termination method over a pigtail.

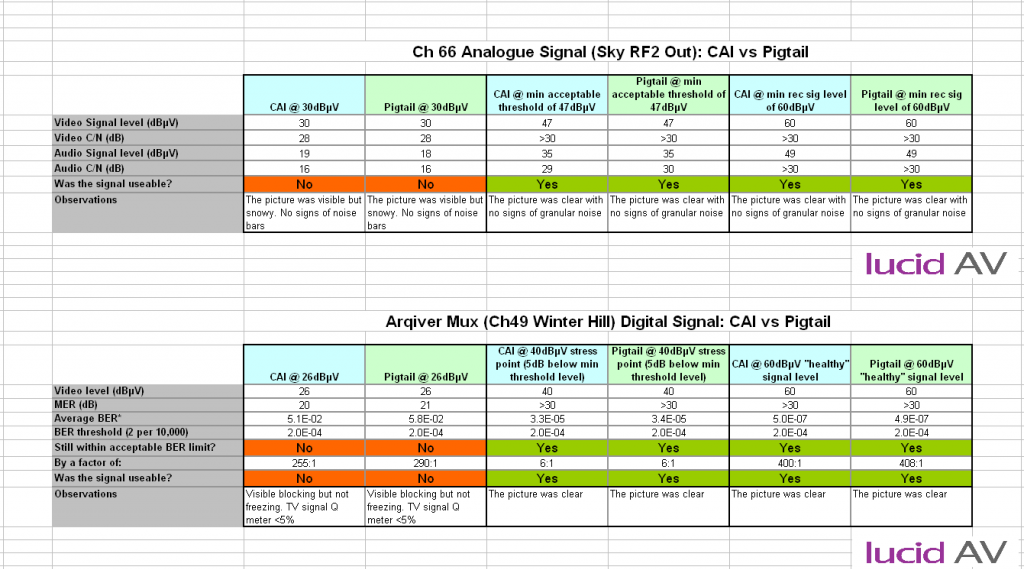

I measured analogue and digital signals at low, medium and high levels:

- Analogue 30 dBuV: the point at which my Horizon meter would hold a stable signal lock.
- Analogue 47 dBuV: the drop-off (threshold) point.
- Analogue 60 dBuV: the minimum recommended signal level for acceptable reception.
- Digital 26 dBuV: the point where the TV began to display blocking on the Arqiva mux.
- Digital 40 dBuV: the minimum acceptable signal level for a DVB-T/T2 tuner.
- Digital 60 dBuV: in the upper part of the acceptable signal level range, representing a strong signal.

The meter displays signal level in 1dB increments. That means for every whole dB displayed there's a subrange of values from .0 to .999 recurring before the display tips up or down to the next whole dB value. It's sensible, then, to adjust up and down to the tipping points where the value changes and take two sets of readings to reflect the upper and lower limits of each whole dB point. So that's what I did, using a 0-20dB variable attenuator in conjunction with fixed in-line attenuators to bring the signal into a suitable adjustment range.
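To illustrate the tipping-point approach, here is a minimal Python sketch. It assumes, as the description above implies, that the meter truncates to the whole dB below the true level rather than rounding, and that fixed and variable attenuator losses simply add in dB. The function names, the 72.35 dBuV source level and the 10 dB + 6 dB fixed pads are hypothetical, not taken from these tests.

```python
import math

def displayed_level(true_dbuv: float) -> int:
    """Hypothetical meter model (assumption): the display truncates to the
    whole dB at or below the true level, so 40.0 to 40.999... all read as 40."""
    return math.floor(true_dbuv)

def level_at_meter(source_dbuv: float, fixed_db: list[float], variable_db: float) -> float:
    """Attenuator losses in dB simply add, so subtract them all from the source level."""
    return source_dbuv - sum(fixed_db) - variable_db

def bracket_for_display(target_db: int, source_dbuv: float, fixed_db: list[float],
                        step_db: float = 0.1, max_var_db: float = 20.0):
    """Sweep a 0-20 dB variable attenuator and return (least, most) attenuation
    over which the meter shows target_db, i.e. the two tipping points.
    The least setting leaves the true level within one step of the top of the
    whole dB, the most setting within one step of the bottom.
    Returns None if the target is never displayed within the sweep."""
    steps = int(round(max_var_db / step_db))
    hits = [i * step_db for i in range(steps + 1)
            if displayed_level(level_at_meter(source_dbuv, fixed_db, i * step_db)) == target_db]
    return (min(hits), max(hits)) if hits else None

# Hypothetical example: 72.35 dBuV at the aerial, 10 dB + 6 dB fixed pads.
lo, hi = bracket_for_display(40, 72.35, [10, 6])
print(f"meter reads 40 dBuV for variable settings {lo:.1f} to {hi:.1f} dB")
```

Taking one set of readings near each end of that bracket gives the upper and lower limits of the displayed whole dB.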

Before each set of final readings the meter and attenuators were left to settle for a minute. If the level reading fluctuated over a tipping point I made fine adjustments, then left the equipment to rest again before taking the final C/N and BER readings. I averaged by noting the high and low points during three 30-second measuring periods over a 5-minute interval. Here are the results.
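One plausible way to turn those high/low notes into a single figure is to take the midpoint of each period and average the three midpoints. This is a sketch of that assumption, not necessarily the exact calculation used here; the `averaged_reading` helper and the sample readings are hypothetical and are not the measured results.

```python
from statistics import mean

def averaged_reading(periods: list[tuple[float, float]]) -> float:
    """Average the (high, low) pairs noted in each measuring period.

    Each period contributes the midpoint of its high and low readings;
    the result is the mean of those midpoints.
    """
    if not periods:
        raise ValueError("need at least one measuring period")
    midpoints = [(high + low) / 2 for high, low in periods]
    return mean(midpoints)

# Hypothetical C/N readings (dB) from three 30-second periods, not real data.
readings = [(31.0, 29.0), (30.5, 29.5), (32.0, 30.0)]
print(f"averaged C/N: {averaged_reading(readings):.2f} dB")
```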

It was suggested by Winston1 that at low signal levels the pigtail would perform significantly worse than a lead made the CAI way. This is the hypothesis being tested.

For analogue at 47 and 60 dBuV the results were identical: no measured difference, and subjectively the pictures looked the same. The only measured difference came at 30 dBuV, where the signal was already unusable, and even then it amounted to 1dB in the audio signal level. When all's said and done, the signal was unusable with both cables, so a 1dB difference in level is pretty academic.

For digital at 40 and 60 dBuV neither connection method significantly outperformed the other. Even at 40 dBuV, which is a below-par signal level for digital, the predicted huge rise in bit error rate (BER) with the pigtail just wasn't apparent. Both cables still provided the set with enough signal, and each kept it well within the performance window required for DVB-T/T2. What minor difference there was in BER amounted to 1 additional bit error per million bits. Given that the BER threshold is 2 errors per 10,000 bits, the phrase "statistically insignificant" comes to mind. I won't dwell on the fact that the pigtail cable appeared to outperform the CAI-method one at 60 dBuV.
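The arithmetic behind "statistically insignificant" can be checked in a few lines of Python. This sketch simply expresses the two figures quoted above as fractions; the `ber` helper is a hypothetical name used for illustration.

```python
def ber(errors: int, bits: int) -> float:
    """Bit error rate as a plain fraction: errored bits / total bits."""
    return errors / bits

difference = ber(1, 1_000_000)  # observed gap: 1 extra bit error per million bits
threshold = ber(2, 10_000)      # quoted threshold: 2 errors per 10,000 bits

print(f"difference {difference:.0e}, threshold {threshold:.0e}")
print(f"difference as a share of the threshold: {difference / threshold:.1%}")
print(f"threshold is {threshold / difference:.0f} times the difference")
```

The gap between the two cables works out at 0.5% of the threshold, or put the other way, the threshold is 200 times larger than the difference.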

The only time BER showed an appreciable difference was when the digital signal was already very broken. If the picture is already pixelating and blocking with a BER of 510 errors per 10,000 bits, does it really matter if the other cable is producing 580? I'm sure Winston1 will seize on this as irrefutable proof, but I can't help thinking that if you've already driven the car off the cliff, it's somewhat academic to be worrying about a scuff in the paintwork while plummeting to earth.
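The same arithmetic applied to the broken-signal readings shows how far past the quoted 2-per-10,000 threshold both cables already were. The post doesn't say which cable gave which figure, so the labels below are deliberately generic; `times_threshold` is a hypothetical helper.

```python
THRESHOLD = 2 / 10_000  # quoted BER threshold: 2 errors per 10,000 bits

def times_threshold(errors_per_10k: float) -> float:
    """Express a reading given as errors per 10,000 bits as a multiple of the threshold."""
    return (errors_per_10k / 10_000) / THRESHOLD

for label, errors in [("one cable", 510), ("the other cable", 580)]:
    print(f"{label}: BER {errors / 10_000:.3f}, {times_threshold(errors):.0f}x the threshold")
```

Both readings come out at hundreds of times the threshold (255x and 290x), so the picture had already failed with either cable.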

Final conclusion: when the chips are down, a well-made cable is a well-made cable. The CAI method may well be superior in some theoretical circumstance or exotic application, but in the real world of consumer TV it doesn't make any worthwhile difference.